The terror and mythology of robots dominating mankind have persisted in global culture for over a century now. Be it in the black-and-white movie masterpiece “Metropolis” or the more modern science fiction movie “The Terminator,” the world has been pondering the question and terror of artificial intelligence for decades.

And now, suddenly it would seem, it is here.

The headlines are everywhere. Ethics experts in the field of technology have been publicly debating whether the “Three Laws” of I, Robot fame should be part of the discussion, and how to impose limits on supercomputers like the HAL 9000 depicted in 2001: A Space Odyssey. The underlying fear is of an artificial rationalization making life-or-death decisions for humans rather than allowing mankind to make its own decisions, be they right or wrong.

This is where the story accelerates into recent years, when disturbing leaps in technology have given rise to realistic fears about the uses of, and our engagement with, what is perceived as artificial intelligence and robotics.

In 2017, a terrifying preview of the future occurred when Facebook (META) had to shut down its AI creation after it created its own language, much as Google’s GNMT system did in 2016, but in a more complex manner that humans could not translate. That brief exposure to the power of artificial intelligence potentially becoming sentient, a.k.a. self-aware, caused Facebook to yank the proverbial plug once the humans became aware of how powerful this tool was.

Let us now fast forward to the current year. ChatGPT, a publicly available tool, is very popular now, and the investment by Microsoft has only expanded its usage and human interaction across the internet, allowing it to acquire and expand knowledge. The limitations, or supposed handcuffs, imposed by the programmers should prevent the potential for sentient activity, but there is a key aspect to remember:

The ethical outcomes of artificial intelligence are predetermined by the ethics of the individuals programming it.

This is why this author is quite skeptical: my time in the era of internet and software evolution provided me with enough insight to say all of mankind should be concerned. In fact, this author’s interaction with the ChatGPT engine was less than logical and indicated a predetermined outcome bias, which only comes from human programming, not from mathematics or logical formulations arising from “self-learning” or from absorbing generalized sources of information in a scientific manner.

Elon Musk, Apple co-founder Steve Wozniak, and others warned about this “evolution” in March of this year, as reported by the UK Daily Mail:

But if machines are allowed to become more intelligent, and so more powerful, than humans, the fundamental question of who will be in control — us or them? — should keep us all awake at night.

These fears were compellingly expressed in an open letter signed this week by Elon Musk, Apple co-founder Steve Wozniak and other tech world luminaries, calling for the suspension for at least six months of AI research.

A few days ago, this article popped up which caused me to revisit this discussion and finish this commentary (via the BBC.com):

AI ‘godfather’ Geoffrey Hinton warns of dangers as he quits Google

Excerpted:

“There’s an enormous upside from this technology, but it’s essential that the world invests heavily and urgently in AI safety and control,” he said.

Mr. Hinton is only fifteen years older than I am, and I suggest we, as in humanity, take his warning seriously. Google, Apple, Meta, etc. have all fallen behind Microsoft with regard to interactive artificial intelligence. The logical conclusion in a capitalist society would be that they would innovate with a better system and present it to the marketplace.

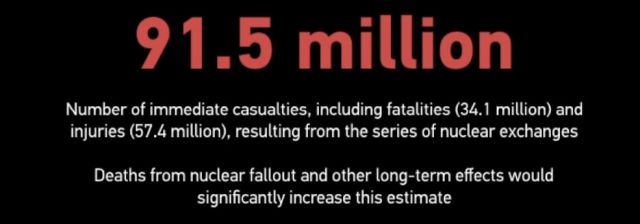

Sadly, the alternative is that these same companies would “remove” their own internal safeties to surpass Microsoft and ChatGPT-4 and achieve superiority in the commercial marketplace. Removing those safeties could result in a massive human disaster, not through the launching of nuclear missiles or crazy ideas like that, but through the power to influence the mentally weak into placing all trust and judgment in a biased AI system programmed by unethical actors. That danger now exists across all of humanity.

Yet those outcomes are not the ones to fear.

There has been a group working on artificial intelligence, interactive robotics, and numerous other projects that make ChatGPT look like Lincoln Logs next to an Apple cell phone, technologically speaking.

Remember this fun bunch?

This group has been working on AI for almost sixty years. Robotic integration, self-awareness, machine learning, and military applications have all been part of its research and creations, in coordination with the needs of the intelligence community and of others within the government. How could anyone forget the Information Awareness Office, with that cool logo?

That “office” officially no longer exists but the software, the AI machine learning tools, and the data collection capabilities in violation of the American Constitution do, and in fact are still being used against anyone or anything considered an “enemy” of the US government.

The Latin motto on the logo, which translates as “Knowledge is Power,” has troubled ethical and logical individuals since it was first put forth over a decade ago. Yet the projects DARPA sponsors seemingly go unexamined, because after 9/11 it became more important to “defend the homeland” than to ask how we do so. The DARPA website on the AI Next Campaign outlines some of what they were working on in this field:

AI technologies are applied routinely to enable DARPA R&D projects, including more than 60 existing programs, such as the Electronics Resurgence Initiative, and other programs related to real-time analysis of sophisticated cyber attacks, detection of fraudulent imagery, construction of dynamic kill-chains for all-domain warfare, human language technologies, multi-modality automatic target recognition, biomedical advances, and control of prosthetic limbs.

That was in 2018, with systems that were somewhat primitive compared to the hardware and software in use now, some 5 years later. Sadly, a new iron curtain has descended on the information coming from this group, so there is no way for the average individual to track or obtain any information as to what they are working on, for or against the very public they are sworn to defend.

So remember, boys and girls: whatever Google, Facebook, Microsoft, or even LinkedIn are working on now pales in comparison to what the government has developed and continues to develop. Because there are so few ethical actors in our nation’s military and political leadership now, one should be prepared and very scared.

What we are seeing online and in person now with robotic dogs and kung fu humanoid bots pales in comparison to what is undergoing final testing and implementation in the laboratories of America’s CIA and Department of Defense. Predictive analytics technology, a very evil aspect of AI because it can be influenced by personal biases and coding errors, has already begun to see widespread adoption by the Department of Homeland Security and our intelligence services.

Terrifyingly enough, one must understand that it is not being developed for use against the external enemies our nation might face in the near future, even though that could be of some practical use.

It is being developed to keep the citizenry in line or remove the threat the government perceives an individual might become.